Better Scientific Writing Prompts

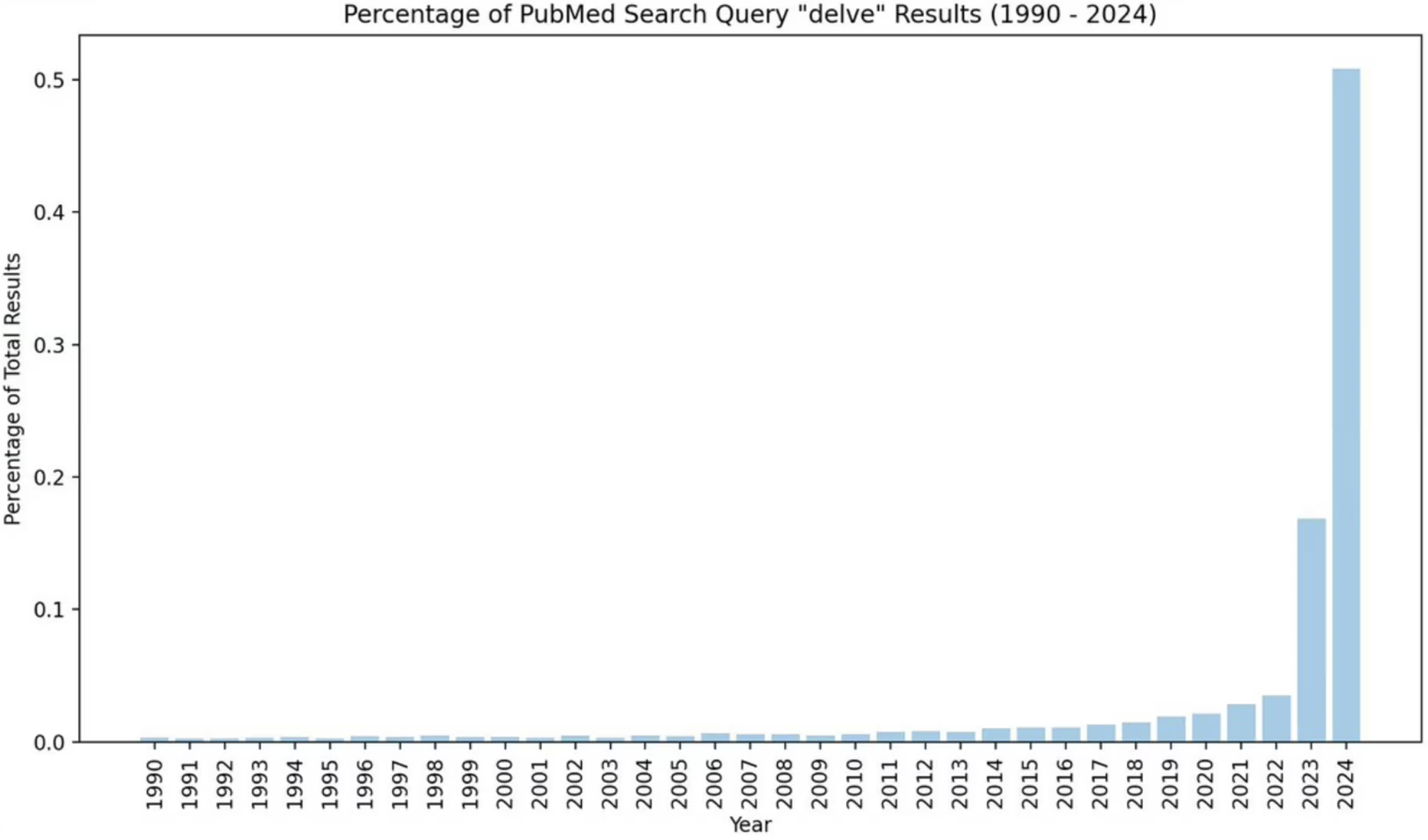

Large Language Models (LLMs) have significantly changed how we write. Until recently, for instance, the word “delve” was a strong hint that a text had been generated by ChatGPT. One widely cited explanation attributes this to the human feedback used during training, provided largely by workers based in Nigeria, where “delve” appears far more frequently in formal English than in American English. Over time, however, this kind of signal has become less reliable, as people have unconsciously adopted LLM writing patterns.

LLMs have also reshaped my own workflow. As a non-native English speaker, I use them to improve sentence clarity: I still write the content myself and use the models mainly to polish it. But I also supervise students who now submit reports that rely heavily on LLMs, often without proper editing. The results are longer, more repetitive documents filled with vague or empty phrasing. It’s increasingly common to find several paragraphs that repeat the same idea with only minor changes in wording.

But I’ve found a small secret weapon to improve my writing - and I secretly hope my students, or others, will come across this post and use it too.

The trick is this: in most of my prompts, I include the phrase “without fluff.” Those two words alone often make the output noticeably more direct, more precise, and easier to read.

I believe I came up with this on my own, but it’s possible I read something similar before and it stuck with me.

So, I’m sharing this tip here.

Adding “without fluff” reduces verbosity and improves clarity, but it does not fix the other problems of AI-generated writing, such as factual errors or weak logical coherence.

Bonus: it also suppresses excessive praise such as “Great question!” or “Interesting point!”

Examples

To show how much this changes the result, here are two comparisons based on typical scientific writing prompts:

Rewriting

Prompt: But, I found a secret weapon to improve further my writing and I (secretly) hope that my students or other students around the world will find this post and use it.

Without “without fluff”: But along the way, I stumbled upon a surprisingly powerful little secret that has significantly elevated the quality of my writing - and I can’t help but quietly hope that one day, perhaps even by chance, some of my students or aspiring writers around the world will discover this very post and take advantage of it too.

With “without fluff”: But I’ve found a small secret weapon to improve my writing - and I secretly hope my students, or others, will come across this post and use it too.

Text summarization

Prompt: “Summarize the contribution of the paper in one paragraph.”

Without “without fluff”: The paper delves into an exploration of recent advances in transformer architectures, particularly focusing on their applicability to natural language understanding tasks. It offers a comprehensive overview of the model design, training strategies, and benchmark results, showcasing its promising potential in improving downstream performance. The authors highlight the novelty of their contribution while emphasizing the implications for future research.

With “without fluff”: The paper introduces a modified transformer architecture for natural language tasks. It details the model design, training method, and benchmark results. The main contribution is a performance improvement on standard datasets using fewer parameters.
